
How Fast Can a Site with Millions of Pages Be Crawled and Indexed?

Lately I often hear questions about how to get large sites into the index quickly. Today someone in the chat dropped a link to a fresh site with millions of pages: people-ua.biz.

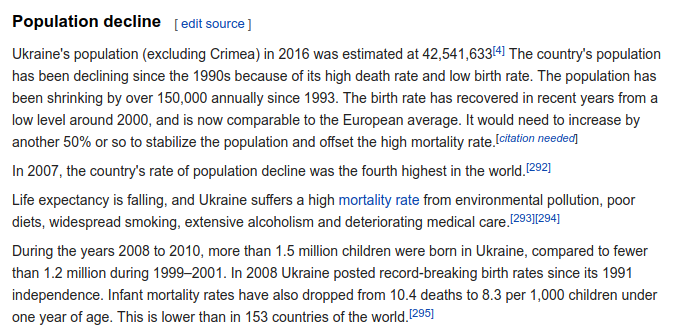

people-ua.biz is a database of citizens of Ukraine (population of about 42.5 million). Each card page contains a name, date of birth, and residential address. You can search by any of these parameters.

We will review the site and analyze how it managed to break into the Google search index.

To check the response of the server, I recommend using this service.
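If you would rather check the response from a script, here is a minimal sketch using only the Python standard library. The function name `check_response` and the timeout value are my own assumptions for illustration. Note that `urlopen` follows redirects, so a 301 or 302 shows up as the status of the final page.

```python
import urllib.error
import urllib.request


def check_response(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code the server sends for a HEAD request to url."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx answers are raised as exceptions but still carry a code.
        return error.code


# Example call (needs network access; the URL is a placeholder):
# print(check_response("https://example.com/"))
```

A HEAD request is enough here because only the status line matters, not the page body.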

The domain was registered on October 20, 2016, according to the whois record for people-ua.biz.

The sitemap directory contains more than 800 sitemap files of 50,000 URLs each. In total, people-ua.biz has more than 42,000,000 pages, which is close to the documented population of Ukraine.
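This layout fits the sitemaps.org protocol, which caps each sitemap file at 50,000 URLs and lets a sitemap index file list the individual files. Below is a rough sketch of the arithmetic and of how such an index could be generated. The base URL and the `sitemap-<n>.xml` naming scheme are hypothetical and not taken from people-ua.biz.

```python
import math
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000  # per-file limit from the sitemaps.org protocol


def sitemap_file_count(total_urls: int) -> int:
    """Number of sitemap files needed to cover total_urls pages."""
    return math.ceil(total_urls / MAX_URLS_PER_SITEMAP)


def build_sitemap_index(base_url: str, file_count: int) -> str:
    """Return a sitemap index XML document listing file_count sitemap files.

    The naming scheme sitemap-<n>.xml is a hypothetical choice for illustration.
    """
    lines = [
        '<?xml version="1.0" encoding="UTF-8"?>',
        '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ]
    for n in range(1, file_count + 1):
        loc = escape(f"{base_url}/sitemap-{n}.xml")
        lines.append(f"  <sitemap><loc>{loc}</loc></sitemap>")
    lines.append("</sitemapindex>")
    return "\n".join(lines)


if __name__ == "__main__":
    count = sitemap_file_count(42_000_000)
    print(count)  # 840
    index_xml = build_sitemap_index("https://example.com/sitemaps", count)
    print(index_xml.count("<sitemap>"))  # 840
```

For 42,000,000 pages this gives 840 files, which agrees with the "more than 800" figure above.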

So how many pages did they manage to push into the Google index in the first month of the site's existence?

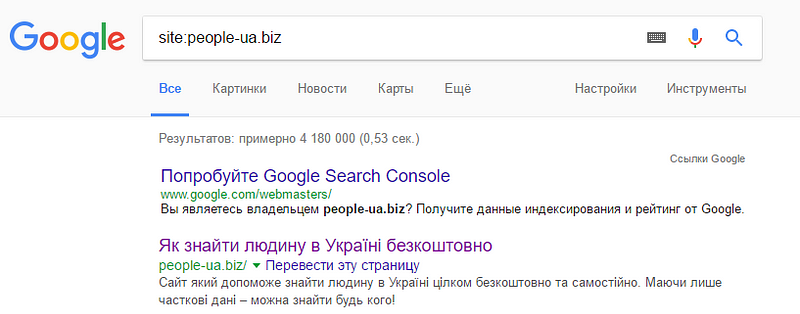

According to the Google SERP, the query site:people-ua.biz returns only slightly more than 4 million pages, approximately 700k per week:

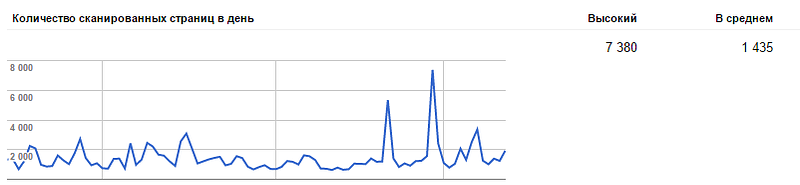
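A quick back-of-the-envelope calculation puts these figures in perspective. The sketch below uses only the numbers quoted above and assumes, purely for illustration, that the weekly rate stays constant, which real crawling rarely does. The function names are my own.

```python
def indexed_share(indexed: int, total: int) -> float:
    """Fraction of the site's pages that are already in the index."""
    return indexed / total


def weeks_to_index_rest(indexed: int, total: int, per_week: int) -> float:
    """Weeks needed to index the remaining pages at a constant weekly rate."""
    return (total - indexed) / per_week


share = indexed_share(4_000_000, 42_000_000)
weeks = weeks_to_index_rest(4_000_000, 42_000_000, 700_000)
print(f"{share:.1%}")  # 9.5%
print(f"{weeks:.0f}")  # 54
```

In other words, roughly a tenth of the site is indexed so far, and at this pace the rest would take about a year.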

On my own projects, I recorded a 6-fold increase over the average in the number of pages crawled by Googlebot after adding a sitemap.

Of these, up to 98% of the pages made it into the index within about two weeks.

What is your record? How many pages have you had crawled and indexed by Googlebot? Or maybe you know of other cases?

Bonus tip: One tool that can help get your website indexed faster is Page Counter by Sitechecker. It finds all of the website's pages, checks their indexing status, and detects technical problems that may be affecting indexing.
